The question was familiar, but it was the first time a reporter had asked me to go on the record since I left PalmSource. She said, "Given all the uncertainty about Palm, should people avoid buying their products?"

I asked what uncertainty she meant.

"You know, all the uncertainty about what they're doing with Microsoft. It's the same as RIM Blackberry, where people say you shouldn't buy because of the uncertainty about their patents."

I'm glad to say that my answer today is the same as it was back when I worked at PalmSource: buy what you need and don't worry about what people say.

If you look for uncertainty, you can find it for any mobile product on the market. Symbian's losing licensees. Microsoft has lost licensees (and missed a few shipment deadlines). Both platforms are extremely dependent on single hardware companies -- Nokia for Symbian and HTC for Microsoft. And for every device there's always a new model or a new software version about to obsolete the current stuff.

Obviously, if a company's on the verge of bankruptcy, you should be careful. But Palm is profitable, and they know how loyal Palm OS users are. They have a huge financial incentive to keep serving those users as long as the users want to buy.

RIM is a slightly more intimidating issue because you never know what the government might do. One week RIM's on the brink of ruin, the next week the patent office is about to destroy the whole case against them. This sort of unpredictability doesn't encourage innovation and investment, which I thought was the whole point of the patent system. What we have now seems more like playing the lottery. But I don't think it's going to lead to a shutdown of RIM's system. If NTP destroys RIM's business, there won't be anything left to squeeze money out of. I think what you're seeing now is brinksmanship negotiation from both sides. It's entertaining, but not something you should base a purchase decision on.

Yes, you can get yourself worked up about risk on a particular platform if you want to. But that level of risk is minuscule compared to the near certainty you'll be disappointed if you buy a "safe" product that doesn't really do what you need. There's much more diversity in the mobile market than there is in PCs. One brand often isn't a good substitute for another, and a product that's appealing to one person may be repulsive to another.

If you're an individual user, you could easily talk yourself into buying a device you'll hate every day. If you're an IT manager specifying products for your company, you could easily end up deploying products that employees won't use.

The safest thing to do is ignore the commentators and buy the device that best meets your needs.

NTT DoCoMo buys 11.66% of Palm OS. Watch this space.

Posted by Andy at 12:30 AM

When I worked at Palm, I was always amazed at how different the mobile market looked in various parts of the world. Although human beings are basically the same everywhere, the mobile infrastructure (key companies, government regulation, relative penetration of PCs, local history) is dramatically different in every country, and so the markets behave very differently. Even within Europe, the use and adoption of mobile technology varies tremendously from country to country.

And then there's Japan, which has its own unique mobile ecosystem that gets almost completely ignored by the rest of the world, even though a lot of the most important mobile trends started there first (cameraphones, for example).

I try to keep tabs on Japan through several websites that report Japanese news in English. Two are Mobile Media Japan and Wireless Watch Japan. They both post English translations of Japanese tech news, and you find all sorts of interesting tidbits that are almost completely beneath the radar in the US.

Case in point: at the end of November, NTT DoCoMo announced that it is raising its ownership of Access Corp from 7.12% of the company's stock to 11.66%, for a price of about $120 million. That's right, the same Access that just bought PalmSource. So DoCoMo, one of the world's most powerful operators, now owns 11.66% of Palm OS and the upcoming Linux product(s). This story got passing mentions on a couple of enthusiast bulletin boards, but I didn't see anything about it anywhere else.

It's possible that the DoCoMo investment has nothing to do with Palm OS or Linux. Access provides the browser for a lot of DoCoMo phones, and DoCoMo frequently subsidizes suppliers in various ways for custom development. But $120 million is a lot for just customizing a browser…

DoCoMo is a strong supporter of mobile Linux for 3G phones, and you have to assume that Access is in there pitching its upcoming OS. I can just picture the conversation: "You already get the browser from us, why don't we just bundle it with the OS for one nice low fee?" Meanwhile, Panasonic just dropped Symbian and plans to refocus all its phone development on 3G phones and mobile Linux. You have to figure Access is talking to them as well.

I don't think most of the mobile observers in the US and Europe realize how intense the interest is in mobile Linux in Asia. A lot of very large companies are putting heavy investment into it. I'm sure this is why Access was willing to pay more than double PalmSource's market value to buy the company.

It's going to be very interesting to see what Access does to make that investment pay off in 2006.

(PS: In case you're wondering, I have no ties to PalmSource/Access and no motivation to hype their story. I just want folks to understand that the mobile OS wars aren't even close to over.)

Is Symbian’s ownership a house of cards?

Posted by Andy at 12:24 AM

I think it's very likely that recent changes in Symbian-land will open a new chapter in the soap opera over the company's ownership structure. At the end, there will probably either be some significant new Symbian owners, or Nokia will finally be majority owner of the company.

Either development will generate a lot of public discussion, hand-wringing, and general angst, but probably won't make any meaningful difference in the company's behavior and fortunes. It should be entertaining, though. Here's what to watch for.

Symbian is owned by a consortium of mobile phone companies:

Nokia 47.9%

Ericsson 15.6%

SonyEricsson 13.1%

Panasonic 10.5%

Siemens 8.4%

Samsung 4.5%

Psion, the company that invented Symbian OS, used to be a major owner. But last year it pulled out and announced plans to sell its shares to Nokia, which would have ended up owning more than 60% of the company. There was a huge fuss, with many people saying that if Nokia owned more than 50% of the shares, Symbian would no longer be an independent platform but a slave to Nokia's whims. In the end, Psion's shares were split among Nokia, SonyEricsson, Panasonic, and Siemens, with Nokia getting 47.9%. Crisis averted.

But the big fuss was actually kind of a sideshow, because under the rules of Symbian's governance, major initiatives must be approved by 70% of the ownership. In other words, you have to own 70% of the company to bend it totally to your will, and if you have just 30% ownership you can veto any major plan.

Guess what -- Nokia was and remains the only partner with more than 30% ownership. So it already exercises huge influence over what Symbian does. But it won't have full control unless and until it hits 70%. As far as I can tell, what bugged the other Symbian partners was the impression people would get if Nokia owned 50% or more of Symbian, and that's what they were fighting over in 2004.

Fast forward to today. Two of Symbian's owners, companies that played a key role in the rescue mission of 2004, are apparently dropping Symbian OS. Panasonic just dramatically restructured its mobile phone business, focusing solely on 3G phones and stopping all Symbian development efforts in favor of Linux. In mid-2005 Siemens sold its mobile phone division, including its Symbian shares, to Benq. Siemens and Benq were both Symbian licensees, and both have reportedly had serious problems with product delays and poor Symbian sales. There have been persistent rumors in the Symbian community that Benq is dropping the OS, and DigiTimes, a Taiwanese publication that has a history of getting insider stories, just ran an article quoting anonymous sources saying the same thing. For the record, I should let you know that I could not find an official announcement about this by Benq. But the continuing reports and rumors are very ominous.

The implications of all this haven't been discussed much online, but let's face it -- how long will Benq and Panasonic want to remain part owners in an OS they no longer use and that damaged their phone businesses? That means 18.9% of Symbian is likely to be for sale in the near future (if it isn't already).
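The arithmetic behind those governance numbers can be checked with a short sketch. It uses the ownership percentages listed above and assumes the rule works exactly as described: a holder controls major initiatives at 70% or more, and can veto whenever everyone else combined falls short of 70%. The function names and the exact treatment of the threshold are illustrative assumptions, not Symbian's actual bylaws.

```python
from decimal import Decimal

# Ownership percentages as listed in the post.
STAKES = {
    "Nokia": Decimal("47.9"),
    "Ericsson": Decimal("15.6"),
    "SonyEricsson": Decimal("13.1"),
    "Panasonic": Decimal("10.5"),
    "Siemens": Decimal("8.4"),  # reportedly passed to Benq with the phone division
    "Samsung": Decimal("4.5"),
}

# Share of ownership needed to approve a major initiative (per the post).
APPROVAL_THRESHOLD = Decimal("70")


def can_control(stake):
    """True if this stake alone is enough to approve a major initiative."""
    return stake >= APPROVAL_THRESHOLD


def can_veto(stake, total):
    """True if all other owners combined fall short of the approval threshold."""
    return total - stake < APPROVAL_THRESHOLD


total = sum(STAKES.values())
veto_holders = [name for name, stake in STAKES.items() if can_veto(stake, total)]
controllers = [name for name, stake in STAKES.items() if can_control(stake)]

# Combined stake of the two owners that appear to be leaving the OS.
for_sale = STAKES["Panasonic"] + STAKES["Siemens"]

print("Total ownership:", total)
print("Can veto:", veto_holders)
print("Can control outright:", controllers)
print("Likely for sale:", for_sale)
```

Under these assumptions only Nokia holds a veto, nobody has outright control, and the stake that may come up for sale matches the 18.9% figure.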

What will happen?

It's possible that a transfer of ownership will be negotiated behind the scenes, in which case Symbian might avoid another big public debate. That would be much better for the company and its partners, but less fun for people who write weblogs.

Whether the change happens in public or private, I think there are several possibilities for the future ownership structure:

--New owners buy the shares. I think the most likely candidates would be NTT DoCoMo and Fujitsu. DoCoMo is shipping a lot of 3G Symbian phones in Japan, and Fujitsu makes most of them. Sharp is also a candidate, I guess; it's just started offering Symbian phones. I don't know how the other Symbian partners (and other operators) would feel about DoCoMo owning part of the company. My guess is the operators would be profoundly uncomfortable, and would be less willing to carry Symbian phones. So Fujitsu and maybe Sharp are probably the best bets.

--Nokia buys the 18.9%. This would give it just under 70% ownership. In other words, there would be no practical change in Symbian's governance, but the optics would scare a lot of licensees. In particular, SonyEricsson has expressed strong discomfort with Nokia getting more than 50%. In the analyst sales estimates I used to get while at PalmSource, shipments of SonyEricsson's Symbian phones had been pretty much flat for a very long time, and the company doesn't seem anxious to put the OS in a lot more models. Increased Nokia ownership of Symbian might be the last straw that would drive S-E away from the OS completely. In that case Nokia would probably have to step in and buy even more of the company.

--Symbian goes public. For years Symbian employees were told that the company was headed toward a public offering, which generated a lot of excitement among them. But eventually the phone companies refused to surrender their control over Symbian and the IPO talk died out. If Nokia were unwilling to take on even more ownership of Symbian, and other investors could not be found, then I guess it's possible that all the owners might decide they want to cash out. This would be exciting for Symbian employees for a little while, but I think that if Nokia didn't control Symbian it would be less willing to use the OS in the long term. So Symbian might go public just in time to evaporate. Which leads me to the last scenario:

--Nokia dumps Symbian and the whole thing falls apart. I don't think this is likely, but phone industry analysis company ARC Chart estimates that Nokia's currently paying Symbian more than $100 million a year for the privilege of bundling Symbian OS with a lot of its phones, and the more Nokia uses Symbian, the more money it owes. It's clear that Nokia is deeply committed to Series 60, its software layer running on top of Symbian. But Series 60 could (with a huge amount of work) be ported to run on top of something else.

Having lived through a couple of OS migrations at Apple and Palm, I should emphasize that changing your OS is very easy for an analyst to write about and very, very, very hard to do in reality. You spend years (literally) rewriting plumbing rather than innovating. It's not something you do unless you have a lot of incentive.

But saving $100 million a year is a pretty big incentive…

The other question people will ask is what this means to phone buyers. Should you avoid Symbian devices because of this uncertainty? My answer: absolutely not. Buy the device that best meets your needs, regardless of OS. If you avoided every smartphone that has uncertainty about it, you wouldn't be able to buy anything. I'm going to come back to this subject in a future post.

Quick notes: a computing radio show, and custom shoes on the web

Posted by Andy at 10:10 PM

Computer Outlook is a syndicated radio program that covers various computing topics (it's also streamed over the Internet, so you can hear it by going to the website). They did a broadcast live from the last PalmSource developer conference, and I had a nice time talking with them at the end of the conference. Last month they asked me to come on the show again. We had fun talking about various topics, mostly mobility-related. They've posted a recording of the program here.

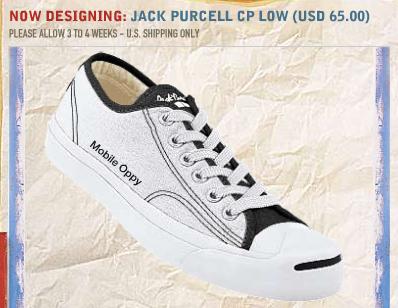

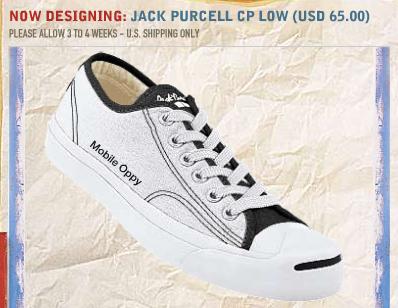

This second item has only the most tenuous connection to mobile computing, but I'm posting it anyway because I think it's cool. You can now design your own Jack Purcells tennis shoes online. The revolutionary importance of this is probably going to be lost on...well, just about everyone reading this, so let me give you a little context. Jack Purcells are tennis shoes that first became famous in the 1930s because they were endorsed by a famous badminton player, Jack Purcell. (Why they're not called badminton shoes, I don't know.) The design has barely changed since then, and today they are just about the most primitive tennis shoes you'll ever see, basically a flat slab of rubber with stitched canvas glued on top. When I was a kid, they were quite popular, and there's a famous photo of James Dean wearing a pair. Gnarly. Unfortunately, in the 1980s, with the rise of sophisticated shoes from Nike and others, Jack Purcells almost completely disappeared from the market.

And yet they never quite completely disappeared. Sometime in the 1990s they became a hot ticket in the Hip Hop community. The Urban Dictionary put it best: "Eternally hip and understated, this is the maverick shoe of simplicity. Its design is has been virtually unchanged since the 1930's. It is a clean and bold casual court shoe and its subtleness has transcended time....Converse All-Stars are cool...but the coolest people on the planet wear Jack Purcells."

The coolest people on the planet, okay?

In an ironic twist, Nike bought the Converse and Jack Purcells brands a couple of years ago and has been putting a lot of investment into them. The most interesting thing Nike has done is its online shoe design engine, which lets you custom-design your own pair of Jack Purcells. You can pick the colors for everything from the rubber sole to the stitching in the canvas, and they'll also monogram the shoes for you.

Here's the shoe I designed:

If this were the Long Tail blog, I'd wax rhapsodic about how the Web lets individuals get exactly the shoes they want. But I'll leave that to others. I do think it's interesting that there's a customizer for Nike shoes as well, but to me it's a lot less appealing because Nikes are so diverse already. The fun thing about custom Jack Purcells is that there's a single well-understood design that you get to do your own riff on. It's kind of like customizing a '67 Mustang.

If you want to create your own Jacks, follow this link and hover over "Design your own." In addition to having fun with the shoes, you'll be exposed to one of the nicest animated sites I've seen on the Web. And that's really why I posted about this. Although the shoes themselves are fun, what I admire most is how Nike used the Web to make the world's most primitive tennis shoes feel cutting edge.

"Software as a service" misses the point

Posted by Andy at 3:44 PM

At the end of October, Microsoft's Ray Ozzie and Bill Gates wrote internal memos announcing that Microsoft must pursue software services. The memos were leaked to the public, I believe intentionally. They drove enormous press coverage of Microsoft's plans, and of the services business model in general.

Most of the coverage focused on two aspects of software as services: downloading software on demand rather than pre-installing it; and paying for it through advertising rather than retail purchase.

Here are two examples of the coverage. The first is from The Economist:

"At heart, said Mr Ozzie, Web 2.0 is about 'services' (ranging from today's web-based e-mail to tomorrow's web-based word processor) delivered over the web without the need for users to install complicated software on their own computers. With a respectful nod to Google, the world's most popular search engine and Microsoft's arch-rival, Mr Ozzie reminded his colleagues that such services will tend to be free—ie, financed by targeted online advertising as opposed to traditional software-licence fees."

Meanwhile, the New York Times wrote, "if Microsoft shrewdly devises, for example, online versions of its Office products, supported by advertising or subscription fees, it may be a big winner in Internet Round 2."

I respect the Times and love the Economist, but in this case I think they have missed the point, as have most of the other media commenting on the situation. The advertising business model is important to the Microsoft vs. Google story because ads are where Google makes a lot of revenue, and Microsoft wants that money. But the really transformative thing happening in software right now isn't the move to a services business model, it's the move to an atomized development model. The challenge extends far beyond Microsoft. I think most of today's software companies could survive a move to advertising, but the change in development threatens to obsolete almost everything, the same way the graphical interface wiped out most of the DOS software leaders.

The Old Dream is reborn

The idea of component software has been around for a long time. I was first seduced by it in the mid 1990s, when I was at Apple. One of the more interesting projects under development there at the time was something called OpenDoc. In typical Apple fashion, different people had differing visions on what OpenDoc was supposed to become. Some saw it as primarily a compound document architecture -- a better way to mix multiple types of content in a single document. Other folks, including me, wanted it to grow into a more generalized model for creating component software -- breaking big software programs down into a series of modules that could be mixed and matched, like Lego blocks.

For example, if you didn't like the spell-checker built into your word processor, you could buy a new one and plug it in. Don't like the way the program handles footnotes? Plug in a new module. And so on.
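As a rough illustration of that mix-and-match idea, here is a minimal sketch of a host application with a replaceable spell-checker slot. This is not OpenDoc's actual API; the `WordProcessor` class, the slot name, and both checker functions are hypothetical names invented for the example.

```python
from typing import Callable, Dict, List

# In this sketch a spell-checker component is just a function that takes
# text and returns the words it does not recognize.
SpellChecker = Callable[[str], List[str]]


class WordProcessor:
    """Hypothetical host application whose parts can be swapped out."""

    def __init__(self) -> None:
        self._components: Dict[str, Callable] = {}

    def plug_in(self, slot: str, component: Callable) -> None:
        # Plugging into an occupied slot replaces the previous component.
        self._components[slot] = component

    def check_spelling(self, text: str) -> List[str]:
        # The host only knows the slot name, not which vendor filled it.
        return self._components["spell-checker"](text)


def builtin_checker(text: str) -> List[str]:
    """Stand-in for the checker that ships with the word processor."""
    known = {"the", "cat", "sat"}
    return [word for word in text.lower().split() if word not in known]


def third_party_checker(text: str) -> List[str]:
    """Stand-in for a replacement module with a larger dictionary."""
    known = {"the", "cat", "sat", "colour"}
    return [word for word in text.lower().split() if word not in known]


app = WordProcessor()
app.plug_in("spell-checker", builtin_checker)
before = app.check_spelling("The cat sat colour")

# Swap in the replacement module without touching the rest of the app.
app.plug_in("spell-checker", third_party_checker)
after = app.check_spelling("The cat sat colour")

print(before, after)
```

The host never changes when the module does, which is the property that was supposed to let pieces of an app be revised independently.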

The benefit was supposed to be much faster innovation (because pieces of an app could be revised independently), and a market structure that encouraged small developers to build on each other's work. Unfortunately, like many other things Apple did in the 1990s, OpenDoc was never fully implemented and it faded away.

But the dream of components as a better way to build software has remained. Microsoft implemented part of it in its .Net architecture -- companies can develop software using modules that are mixed and matched to create applications rapidly. But the second part of the component dream, an open marketplace for mixing and matching software modules on the fly, never happened on the desktop. So the big burst in software innovation that we wanted to drive never happened either. Until recently.

The Internet is finally bringing the old component software dream to fruition. Many of the best new Internet applications and services look like integrated products, but are actually built up of components. For example, Google Maps consists of a front end that Google created, running on top of a mapping database created by a third party and exposed over the Internet as a service. Google is in turn enabling companies to build more specialized services on top of its mapping engine.

WordPress is my favorite blogging tool in part because of the huge array of third-party plug-ins and templates for it. Worried about comment spam? There are several nice plug-ins to fight it. Want a different look for your blog? Just download a new template.

You have to be a bit technical to make it all work, but the learning curve's not steep (hey, I did it). For people who are technical, the explosion of mix-and-match software and tools on the web is an incredible productivity multiplier. I had lunch recently with a friend who switched from mobile software development to web services in part because he could get so much more done in the web world. To create a new service, he could download an open source version of a baseline service, make a few quick changes to it, add some modules from other developers, and have a prototype product up and running within a couple of weeks. That same sort of development in the traditional software world would have taken a large team of people and months of work.

This feeling of empowerment is enough to make a good programmer giddy. I think that accounts for some of the inflated rhetoric you see around Web 2.0 -- it's the spill-over from a lot of bright people starting to realize just how powerful their new tools really are. I think it's also why less technical analysts have a hard time understanding all the fuss over Web 2.0. The programmers are like carpenters with a shop full of shiny new drills and saws. They're picturing all the cool furniture they can build. But the rest of us say, "so what, it's a drill." We won't get it until more of the furniture is built.

The other critical factor in the rise of this new software paradigm is open source. When I was scheming about OpenDoc, I tried to figure out an elaborate financial model in which developers could be paid a few dollars a copy for each of their modules, with Apple or somebody else acting as an intermediary. It was baroque and probably impractical, but I thought it was essential because I never imagined that people might actually develop software modules for free.

Open source gets us past the whole component payment bottleneck. Instead of getting paid for each module, developers share a pool of basic tools that they can use to assemble their own projects quickly, and they focus on just getting paid for those projects. For the people who know how to work this way, the benefits far outweigh the cost of sharing some of their work.

The Rise of the Mammals

Last week I talked with Carl Zetie, a senior analyst at Forrester Research. Carl is one of the brightest analysts I know (I'd point you to his blog rather than his bio, but unfortunately Forrester doesn't let its employees blog publicly).

Carl watches the software industry very closely, and he has a great way of describing the change in paradigm. He sees the world of software development breaking into two camps:

The old paradigm: Large standard applications. This group focuses on the familiar world of APIs and operating systems, and creates standalone, integrated, feature-complete applications.

The new paradigm: Solutions that are built up out of small atomized software modules. APIs don't matter very much here because the modules communicate through metadata. This group changes standards promiscuously (they can be swapped in and out because the changes are buffered by the use of metadata). Carl cited the development tool Eclipse as a great example of this world; the tool itself can be modified and adapted ad hoc.
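Here is one possible reading of "modules communicate through metadata," sketched in Python. This is my own toy interpretation, not something Carl described: the `make_module` helper, the `consumes`/`produces` fields and the module names are all hypothetical. Each module describes what it takes in and what it puts out, and the host wires modules together by matching those descriptions rather than by calling a fixed API.

```python
def make_module(name, consumes, produces, run):
    """Bundle a function with metadata describing its input and output."""
    return {"name": name, "consumes": consumes, "produces": produces, "run": run}


# Three hypothetical modules. "shout" and "title_case" carry the same
# metadata, so either one can fill the same slot.
source = make_module("fetch_feed", None, "text", lambda _: "atomized software")
shout = make_module("shout", "text", "headline", lambda text: text.upper())
title = make_module("title_case", "text", "headline", lambda text: text.title())


def assemble(modules):
    """Chain modules by matching each 'produces' to the next 'consumes'."""
    value, kind, used = None, None, []
    remaining = list(modules)
    while True:
        # Find a module whose declared input matches what we currently hold.
        nxt = next((m for m in remaining if m["consumes"] == kind), None)
        if nxt is None:
            return value, used
        value, kind = nxt["run"](value), nxt["produces"]
        used.append(nxt["name"])
        remaining.remove(nxt)


loud, used_loud = assemble([source, shout])
neat, used_neat = assemble([source, title])  # swapped module, same host code
```

Nothing in `assemble` names a specific module, which is the sense in which a swap is "buffered" by the metadata in this sketch.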

I think the second group is going to displace the first group, because the second group can innovate so much faster. It'll take years to play itself out, but it's like the mice vs. the dinosaurs, only this time the mice don't need an asteroid to give them a head start.

This situation is very threatening for the established software companies. Almost all of the big ones are based on old-style development, using large teams of programmers to create ponderous software programs with every feature you could imagine. The scale of their products alone has been a huge barrier to entry -- you'd have to duplicate all the features of a PowerPoint or an Illustrator before you could even begin to attack it. Few companies can afford that sort of up-front investment.

But the component paradigm, combined with open source, turns that whole situation on its head. The heavy features of a big software program become a liability -- because the program's so complex, you have to do an incredible amount of testing anytime you add a new feature. The bigger the program becomes, the more testing you have to do. Innovation gets slower and slower. Meanwhile, the component guys can sprint ahead. Their first versions are usually buggy and incomplete, but they improve steadily over time. Because their software is more loosely coupled, they can swap modules without rewriting everything else. If one module turns out to be bad, they just toss it out and use something else.

There are drawbacks to the component approach, of course. For mission-critical applications that require absolute reliability, something composed of modules from various vendors is scary. Support can also be a problem -- when an application breaks, how do you determine which component is at fault? And it's hard (almost laughable at this point) to picture a major desktop application replaced by today's generation of online modules and services. The ones I've seen are far too primitive to displace a mainstream desktop app today.

But I think the potential is there. The online component crowd is systematically working through the problems, and if you project out their progress for five years or so, I think there will come a time when their more rapid innovation will outweigh the integration advantages of traditional monolithic software. Components are already winning in online consumer services (that's where most of the Web 2.0 crowd is feeding today), and there are some important enterprise products. Over time I think the component products will eat their way up into enterprise and desktop productivity apps.

In this context, the fuss about software you can download on the fly, and support through advertising, is a sideshow. For many classes of apps it will be faster to use locally cached software for a long time to come, and I don't know if advertising in a productivity application will ever make much sense. But I'm certain that the change in development methodology will reshape the software industry. The real game to watch isn't ad-supported services vs. packaged software, it's atomized development vs. monolithic development.

What does it all mean?

I think this has several very important implications for the industry:

The big established software companies are at risk. The new development paradigm is a horrible challenge for them, because it requires a total change in the way they create and manage their products. Historically, most computing companies haven't survived a transition of this magnitude, and much of the software industry doesn't even seem to be fully aware of what's coming. For example, I recently saw a short note in a very prominent software newsletter, regarding Ruby on Rails (an open source web application framework, one of the darlings of the Web 2.0 crowd). "It's yet another Internet scripting language," the newsletter wrote. "We don't know if it's important. Here are some links so you can decide for yourself."

I guess I have to congratulate them for writing anything, but what they did was kind of like saying, "here's a derringer pistol, Mr. Lincoln. Don't know if it's important or not, but you might want to read about it."

Some software companies are trying to react. I believe the wrenching re-organization that Adobe's putting itself through right now is in part a reaction to this change in the market. The re-org hasn't gotten much coverage -- in part because Adobe hasn't released many details, and in part because the press is obsessed with Google vs. Microsoft. But Adobe has now put Macromedia general managers in charge of most of its business units, displacing a lot of long-time Adobe veterans who are very bitter about being ousted two weeks before Christmas, despite turning in good profits. I've been getting messages from friends at Adobe who have been laid off recently, and all of them say they were pushed aside for Macromedia employees. "It's a reverse acquisition," one friend told me.

I personally think what Adobe's doing is grafting Macromedia's Internet knowledge and reflexes into a company that has been very focused on its successful packaged software franchises. It's going to be a painful integration process, but the fact that Adobe's willing to put itself through this tells you how important the change is. Better to go through agonizing change now than to lose the whole company in five years.

What does Microsoft do? In the old days, Microsoft's own extreme profitability made it straightforward for the company to win a particular market. Microsoft could spend money to bleed the competitor (for example, give away the browser vs. Netscape), while it worked behind the scenes to duplicate and then surpass the competitor's product. But the component software crowd doesn't want to take over Microsoft's revenue stream; it wants to destroy about 90% of it, and it can then be very successful living off the remaining 10% or so. To co-opt their tactics, Microsoft would have to destroy most of its own revenue.

Here's a simplified example of what I mean: some of the component companies are developing competitors to the Office apps. A couple of examples are Writely and JotSpot's Tracker. Microsoft could fight them by trimming down Word and Excel into lightweight frameworks and inviting developers to extend them. The trouble is that you can't charge a traditional Word or Excel price for a basic framework; if you do, competing frameworks will beat you on price. And if you enable third parties to make the extensions, then they'll get any revenue that comes from extensions. I don't see how Microsoft could sell enough advertising on a Word service to make up the couple of hundred dollars in gross margin per user that it gets today from Office (and that it gets to collect again every time it upgrades Office).

The Ozzie memo seems to suggest that Microsoft will try to integrate across its products, to make them complete and interoperable in ways that will be very hard for the component crowd to copy. But that adds even more complexity to Microsoft's development process, which is already becoming famous for slowness. If you gather all the dinosaurs together into a herd, that doesn't stop the mice from eating their eggs.

I wonder if senior management at Microsoft sees the scenario this starkly. If so, a logical approach might be to make an all-out push to displace the Google search engine and take over all of Google's advertising business, to offset the coming loss of applications revenue. Will Microsoft suddenly offer to install free WiFi in every city in the world? Don't laugh; historically, when Microsoft felt truly threatened it was willing to take radical action. Years ago the standard assumption was that Gates and Ballmer utterly controlled Microsoft -- they held enough of the company's stock that they could ignore the other shareholders if they had to. I'm not sure if that's still true. Together the stock holdings of Gates and Ballmer have dropped to about 13% of the company. Microsoft execs hold another 14%, and Paul Allen has about 1%. Taken together, that's 28%. Is that enough to let company management make radical moves, even at the expense of short-term profits? I don't know. But I wouldn't bet against it.

The rebirth of IT? The other interesting potential impact was pointed out to me by a co-worker at Rubicon Consulting, Bruce La Fetra. Just as new software companies can become more efficient by working in the component world, companies can gain competitive advantage by aggressively using the new online services and open source components for their own in-house development. But doing so requires careful integration and support on the part of the IT staff. In other words, the atomization of software makes having a great IT department a competitive advantage.

How about that, maybe IT does matter after all.

Microsoft and the quest for the low-cost smartphone

Posted by Andy at 8:14 PM

The Register picked up an article from DigiTimes reporting that Microsoft's seeking bids to create a sub-$300 Windows Mobile smartphone.

At first the article made no sense to me because it's easy today to create a Windows Mobile or Palm Powered smartphone for less than $300. You use a chipset from TI, which combines the radio circuitry and processor in the same part. You can't doll up the device with a keyboard like the Treo, so you end up with a basic flip phone or candybar like the ones sold by the good people at GSPDA and Qool Labs.*

This works only with GSM phones (Cingular and T-Mobile in the US); if you want CDMA (Sprint or Verizon) your hardware costs more. And the costs for 3G phones are a lot higher.

I have a feeling it's lower-cost 3G smartphones that Microsoft is actually after. Most of the operators don't want to take on smartphones with anything less than 3G even today, and if Microsoft's looking ahead to future devices there's no reason to plan for anything other than 3G.

A 3G smartphone at $300 would sell for about $99-$150 after the operator subsidy, so Microsoft's trying to get its future smartphones down closer to mainstream phone price points.
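The arithmetic behind that estimate, as a quick Python sketch. The only inputs are the two figures in the paragraph above; the variable names are mine, and the implied subsidy is simply the difference between them, not a number reported by any operator.

```python
device_cost = 300          # Microsoft's reported target, in dollars
shelf_prices = (99, 150)   # post-subsidy price range quoted above

# Subsidy the operator would have to absorb at each end of the range.
implied_subsidies = [device_cost - price for price in shelf_prices]
```

In other words, the estimate assumes the operator absorbs somewhere between $150 and about $200 per phone.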

What no one seems to be asking is why it's so important to do this. The assumption most people make is that by reaching "mainstream" price points you'll automatically get much higher sales. That's what the Register seems to believe:

"Symbian remains the world's most successful mobile operating system, almost entirely because Nokia has used it not only for high-end smart-phones but to drive mid-range feature-phones too."

Okay. But that happens only because Nokia's willing (at least for the moment) to eat several dollars per unit to put Symbian into phones bought by feature phone buyers: people who won't pay extra for an OS, and in fact don't even know Symbian is in their phones. Symbian is basically a big Nokia charity at the moment. Most other vendors are unwilling to subsidize Symbian like this, which is why Symbian sales outside Japan are almost totally dominated by Nokia.

Will Microsoft be able to get vendors to push its products the way Nokia does Symbian? Maybe with enough financial incentives. But I think the underlying problem is that the OS isn't adding enough value to drive large numbers of people to buy it, at any price. When we researched mobile device customers at PalmSource, we found lots of people who were willing to pay extra for devices that met their particular needs. Fix the product and the price problem will take care of itself.

Michael Gartenberg says he was very impressed by the preview he just got of the next version of Windows Mobile. I tend to trust Michael's judgment, so maybe there's hope.

__________

*If only some US operator had the wisdom to carry their products. Sigh.

Google to sell thin client computers?

Posted by Andy at 2:01 PM

There was an interesting little tidbit buried deep in a recent NY Times story on Microsoft and software as a service:

"For the last few months, Google has talked with Wyse Technology, a maker of so-called thin-client computers (without hard drives). The discussions are focused on a $200 Google-branded machine that would likely be marketed in cooperation with telecommunications companies in markets like China and India, where home PC's are less common, said John Kish, chief executive of Wyse."

Google sells servers, and there has been speculation about client hardware since at least early this year. But this is the first firm report I've seen.

I'm not a big fan of thin clients; local storage is very cheap, and it offers much higher bandwidth than even the fastest network. But I presume the most significant thinness in a Google client would be the absence of a Microsoft operating system.

Hey, Google, while you're at it you need to do a mobile client too.

Revisionist history

Posted by Andy at 12:13 AM

I'm working on a posting about software as a service. During my research, I reviewed Microsoft's recent executive memos on the subject. As always happens when I read Microsoft's stuff, I was struck by the loving craftsmanship that goes into those documents. Although these are supposedly private internal memos, I believe they're written with the expectation that they will leak. Microsoft slips little bits of revisionist history into the memos. Since the history notes are incidental to the main message of the memo, most people don't even think to question them. It's very effective PR. Here are two examples. Let's watch the message masters at work:

Ray Ozzie wrote: "In 1990, there was actually a question about whether the graphical user interface had merit. Apple amongst others valiantly tried to convince the market of the GUI's broad benefits, but the non-GUI Lotus 1-2-3 and WordPerfect had significant momentum. But Microsoft recognized the GUI's transformative potential, and committed the organization to pursuit of the dream – through investment in applications, platform and tools."

Reality: By 1990 everyone who understood computers, and I mean everyone, agreed that the graphical interface had merit. Everyone also agreed that Microsoft's implementation of it sucked. Meanwhile Microsoft had tried and failed to displace Lotus 1-2-3 and WordPerfect from leadership in the DOS world because they were established standards. One thing Microsoft recognized about the GUI was its transformative potential to break these software standards and replace them with its own Word and Excel.

And by the way, congratulations to Microsoft for figuring that out. If Lotus and WordPerfect had been more attentive to the shift to GUIs, I don't think Microsoft could have displaced them. That's an important lesson for today's software companies looking at the new development paradigm on the web.

Bill Gates wrote: "Microsoft has always had to anticipate changes in the software business and seize the opportunity to lead."

Wow. Exactly which changes did Microsoft anticipate and lead, as opposed to respond to and co-opt?

But seize is the right word. I like that one a lot.

Bring on the Singularity!

Posted by Andy at 6:13 PM

It's philosophy time. If you're looking for comments on the latest smartphone, you can safely skip this post.

One of the nice side effects of doing a job search in Silicon Valley is that you get to step back and take a broader view of the industry. A friend calls being laid off the "modern sabbatical," because this is the only opportunity most of us have for multi-month time off from work.

It's not really a sabbatical, of course. Unless you're supremely self-confident, it's a time of uncertainty. And instead of going on vacation you spend most of your days networking: having breakfast and lunch and coffee with all your old co-workers and other contacts. (Speaking of coffee shops, the drinking chocolate thing from Starbucks is obscenely expensive but tastes really good.)

In addition to the exotic drinks, the networking itself is very interesting because you get to hear what everyone else is working on. You get a glimpse of the big picture, and that's what I want to talk about tonight.

To work in Silicon Valley today is to be suspended between euphoria and despair.

The euphoria comes from all the cool opportunities that are unfolding around us. The despair comes from the fear that it's all going to dry up and blow away in the next ten years.

Let's talk about the euphoria first. The number of interesting, potentially useful business ideas floating around in the Valley right now is remarkable. As I went around brainstorming with friends, it seemed like every other person had a cool new idea or was talking with someone who had a promising project. Unlike the bubble years, a lot of these ideas seem (to me, at least) to be much more grounded in reality, and to have a better chance of making money.

The success of Google and Yahoo has put a vibrant economic engine at the center of Silicon Valley, and the competition between them and Microsoft is creating a strong market for new startups. In the late 1990s, the VCs loved funding networking startups because if they were any good, Cisco would buy them before they even went public. It feels like the same thing is happening today with Internet startups, except this time there are several companies competing to do the buying.

And then there's Apple, which is reaching a scale where it can make significant investments in…something. I don't know what. I personally think it's going to be the next great consumer electronics company, but we'll see. What I'm pretty sure of is that if you give Steve Jobs this much money and momentum, he's not going to sit back on his haunches and be satisfied.

The overall feeling you get is one of imminence, that great things are in the process of happening, even if you can't always see exactly what they are. The tech industry is so complex, and technologies are changing so quickly, that I don't think it's possible for any one person to understand where it's all going. But it feels like something big is just over the horizon, something that'll reset a lot of expectations and create a lot of new opportunities.

Perhaps we're all just breathing our own exhaust fumes, but the glimpses of that something that I got during my search seem a lot more genuine, a lot more practical, than they did during the bubble. It's an exciting time to be in Silicon Valley.

Then there's the despair. In two words, China and India.

I don't know anyone in Silicon Valley who dislikes Chinese or Indian people as a group (a lot of us are Chinese and/or Indian). But there's a widespread fear of the salary levels in China and India. I saw this first-hand at PalmSource, when we acquired a Chinese company that makes Linux software. Their engineers were…pretty darned good. Not as experienced on average as engineers in Silicon Valley, but bright and competent and very energetic. Nice people. And very happy to work for one tenth the price of an American engineer.

That's right, for the same price as a single engineer in the Bay Area, you can hire ten of them in Nanjing, China.

Now, salary levels are rising in China, so the gap will narrow. I wouldn't be surprised if it has already changed to maybe eight to one. But even if it went to five to one, there's no way the average engineer in Silicon Valley can be five times as productive as the average engineer in China. No freaking way, folks. Not possible.

Today, there's still a skills and experience gap between the US and China in software. Most software managers over there don't know how to organize and manage major development projects, or how to do quality control; and their user interface work is pathetic. But that can all be learned. If you look out a decade or so, the trends are wonderful for people in China and India, and I'm honestly very happy for them. They deserve a chance to make better lives for themselves. But those same trends are very scary for Silicon Valley.

Embracing Asia

One way for today's tech companies to deal with this is to co-opt the emerging economies, to move work to the low-cost countries and get those cost advantages for themselves. There are some great examples of companies that have already done this. One is Logitech.

Did you say Logitech? They make…what, mice and keyboards? Most of Silicon Valley ignores them because their markets aren't sexy and they don't publicize themselves a lot. But when I look at Logitech, here's what I see: A company owned by the Swiss and headquartered in Silicon Valley (two of the highest-cost places on Earth) that makes $50 commodity hardware and sells it at a very nice profit.

Almost no one in Silicon Valley knows how to do that. In fact, the conventional wisdom is that it's impossible. And yet Logitech grows steadily, year after year.

One of their secrets is that they got into China long ago, years before it was fashionable. They don't outsource manufacturing to China; they have their own manufacturing located there. This lets Logitech go head to head with companies that want to fight them on price.

Another example is Nokia. The image of Nokia phones is that they're high-end and kind of ritzy, but when I was studying the mobile industry at Palm, I was surprised to see how much of Nokia's volume came at the low end of their product lines. Like Wal-Mart does in retailing, Nokia leverages its huge global manufacturing volumes to make phones cheaper than anyone else. It's the sales leader and also the low-cost producer, at least among the name brands.

So there's hope that Silicon Valley's tech companies, and their senior management, may survive by embracing the cost advantages of operating in China and India. But that's little comfort to engineers being told that their jobs are moving to Bangalore. And it doesn't help the small startups that don't have the scale to work across continents.