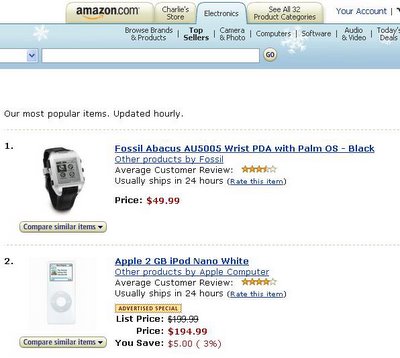

The image above was sent to me today by a former PalmSource colleague. Yes, that's a list of Amazon's best-selling consumer electronics products.

And yes, that's the Fossil Palm OS watch at #1, outselling the iPod Nano.

The Fossil saga is one of the saddest stories in the licensing of Palm OS. Fossil had terrible manufacturing problems with the first-generation product, and so the company became cautious about the market. I think the underwhelming performance of its Microsoft SPOT watches didn't help either. When the second-generation Palm OS product wasn't an immediate runaway success, Fossil backed away from the market entirely. They killed some achingly great products that were in development, stuff that I think would have gone over very well. And now here's that original Palm OS watch on top of the sales chart.

You don't want to read too much into this; the Fossil watch is on closeout, and ridiculously discounted. And being at the top of a sales list at one moment in time is not the same as being a perennial best-seller.

But still, this is the holiday buying season, and that's a pretty remarkable sales ranking. It makes me wonder: was the Fossil product a dumb idea? Or was it just the wrong price point, at the wrong time?

It's very fashionable these days to dismiss the mobile data market, or to think of it as just a phone phenomenon. But we're very, very, very early in the evolution of mobile data, equivalent to where PCs were when VisiCalc came out. We haven't even come close to exploring the price points, form factors, and software capabilities that mobile devices will develop in the next 10 years. A lot of experimental products are going to fail, but some are going to be successes. Anyone who says the market is played out, or that a particular form factor will “never” succeed, just doesn't understand the big picture.

I don't know that an electronic calendar watch is ever going to be on everyone's wrist, but wait another five years and you'll be able to make a pretty powerful wristwatch-sized data device profitably for $50. And I'll bet you somebody's going to do something very cool with it.

(PS: Just so no one thinks I'm trying to pull a fast one, I should let you know that the Amazon list changes hourly, and the sales ranking of the Fossil watch seems to jump around a lot. But the fact that it's even in the top 25 impresses me.)